Computer Vision - Structure From Motion

December 2018

PYTHON

OPENCV

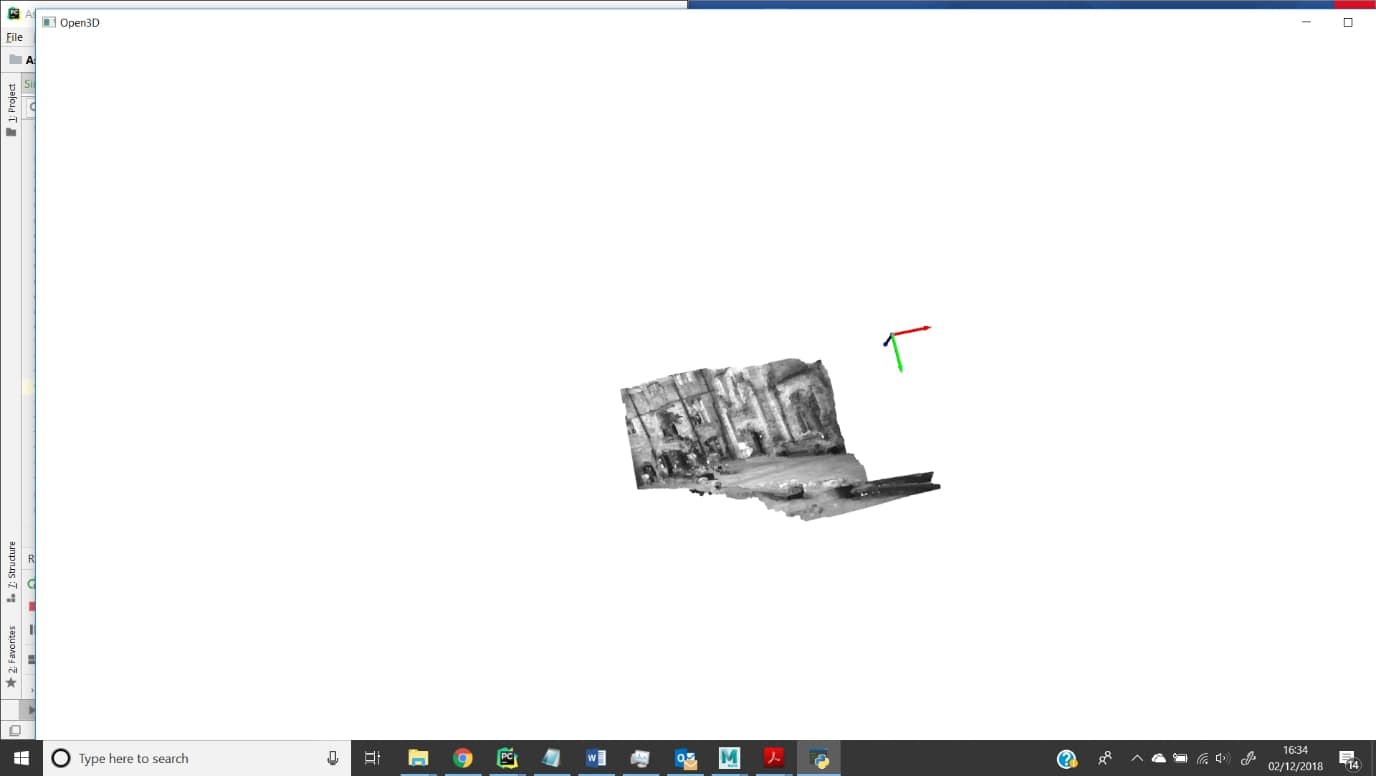

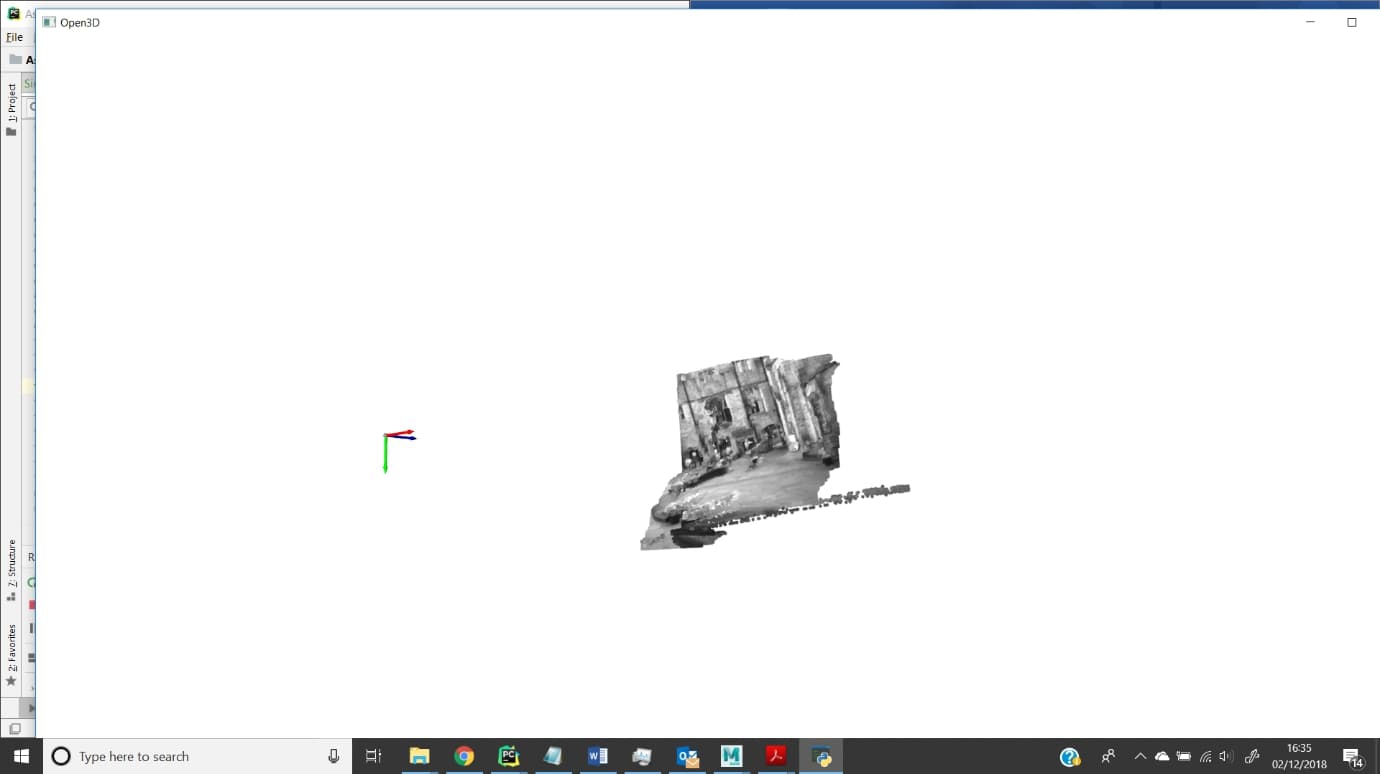

The goal of this project was to use state-of-the-art computer vision techniques to create a 3D model of an environment from a sequence of 2D images taken from a car driving around a city. The motivation was to explore how self-driving cars might perceive a 3D world from their 2D images, which could inform decisions such as where to drive or what objects are in the scene.

What I Learnt:

- How to use the OpenCV Python library to apply image processing and computer vision techniques to images and videos

- How to implement a state-of-the-art Structure-From-Motion algorithm

Algorithm:

Assuming two images taken in sequence, the basic algorithm works as follows:

- Features are extracted from each image using a feature detector and descriptor such as SURF

- Features are matched between the images to form feature correspondences

- The Essential Matrix is found using these matched features (Note: the essential matrix encodes the relative rotation and translation, up to scale, between the two camera views)

- The RT matrix, describing the rotation and translation between the two positions from which the camera took the images, is determined by decomposing the Essential Matrix

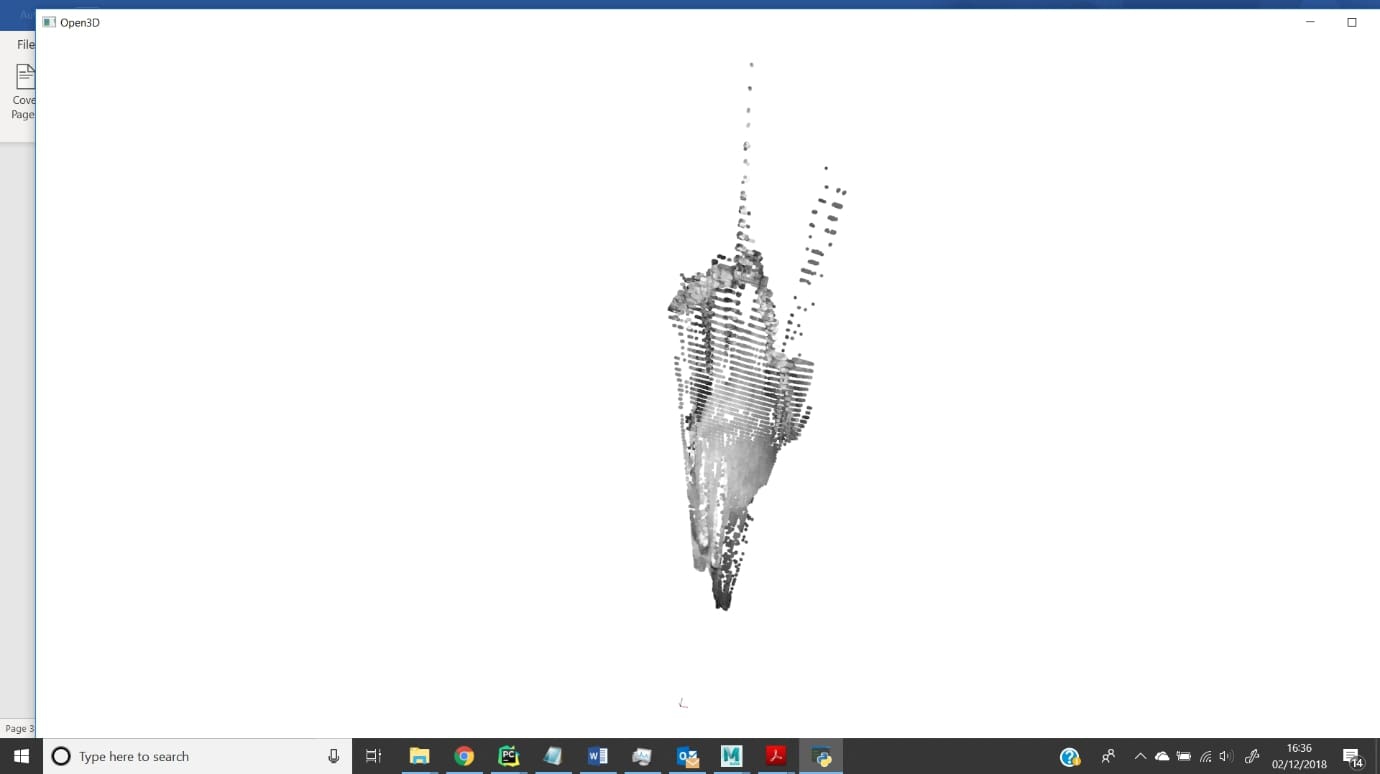

- At this point, a sparse point cloud of 3D points can be created by triangulating the matched features using the rotation and translation found previously. The following two steps are optional.

- The RT matrix can be used to rectify the image pair so that a disparity map can be computed, giving a depth estimate for every pixel in the image

- A dense point cloud can be created using this disparity map.
